Continuous Discovery Habits: Discover Products that Create Customer Value and Business Value - Teresa Torres

— books — 114 min read

About the book

- Buy on Amazon

- Goodreads reviews

- Pages: 244

Personal summary

Mindsets

- Outcome-oriented: think in outcomes rather than outputs

- Customer-centric: create customer value as well as business value

- Collaborative: leverage the expertise and knowledge of the product trio to make decisions

- Visual: draw, externalize your thinking, and map what you know

- Experimental: identify assumptions and gather evidence

- Continuous: infuse discovery continuously throughout your development process

Actions

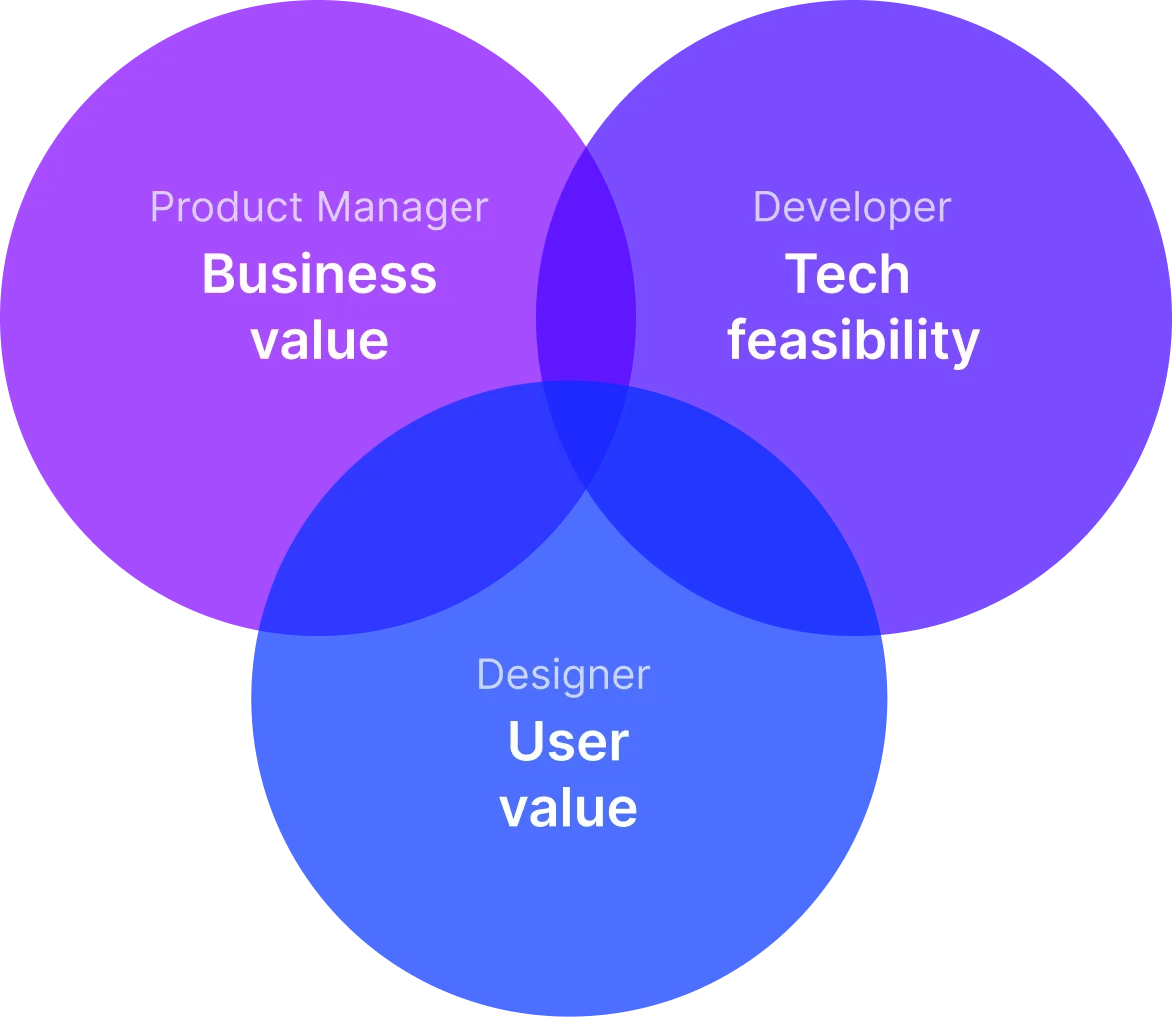

- build product trio: product manager, product designer, software engineer

- build the keystone habit: continuous interviewing weekly

- work backward to find:

  - product outcome: "If our customers had this solution, what would it do for them?"

  - business outcome: "If we shipped this feature, what value would it create for our business?"

- show the work using visualization: boxes and arrows, opportunity solution tree, story maps, interview snapshot

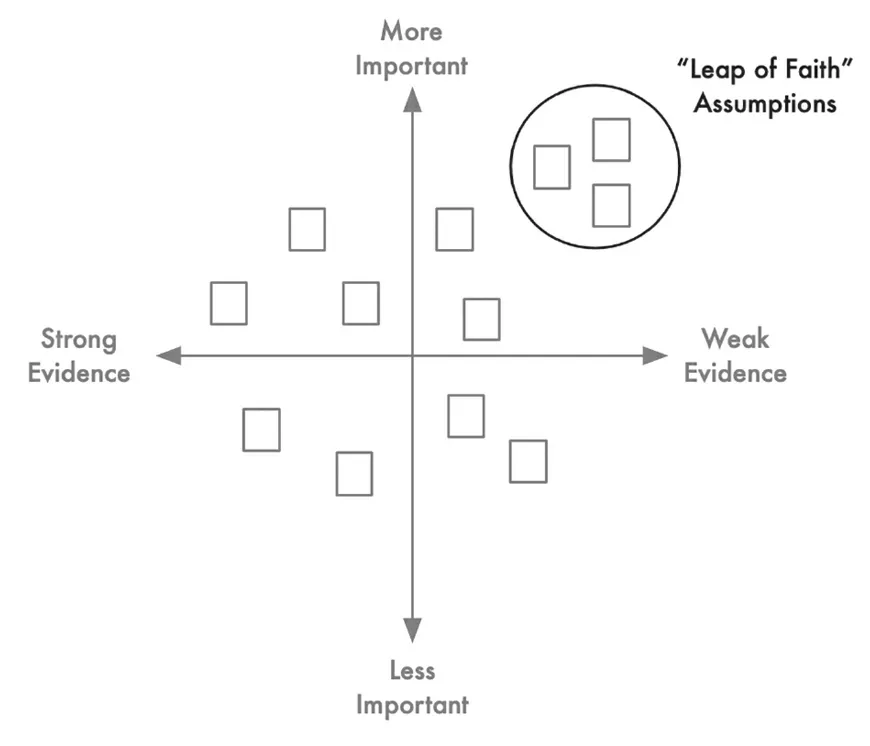

- focus on problems before jumping to solutions, prioritize "leap of faith" assumptions

- start small, interview often, test fast experiments and prototypes before building, iterate

- measure impact, combine qualitative and quantitative data for decisions

Quotes

Part I: What Is Continuous Discovery?

Chapter 1: The What and Why of Continuous Discovery

The Prerequisite Mindsets

1. Outcome-oriented: The first mindset is both a mindset and a habit. You'll learn more about the habit in the coming chapters, but the mindset requires that you start thinking in outcomes rather than outputs. That means rather than defining your success by the code that you ship (your output), you define success as the value that code creates for your customers and for your business (the outcomes). Rather than measuring value in features and bells and whistles, we measure success in impact: the impact we have had on our customers' lives and the impact we have had on the sustainability and growth of our business.

2. Customer-centric: The second mindset places the customer at the center of our world. It requires that we not lose sight of the fact (even though many companies have) that the purpose of business is to create and serve a customer. We elevate customer needs to be on par with business needs and focus on creating customer value as well as business value.

3. Collaborative: The third mindset requires that you embrace the cross-functional nature of digital product work and reject the siloed model, where we hand off deliverables through stage gates. Rather than the product manager decides, the designer designs, and the engineer codes, we embrace a model where we make team decisions while leveraging the expertise and knowledge that we each bring to those decisions.

4. Visual: The fourth mindset encourages us to step beyond the comfort of spoken and written language and to tap into our immense power as spatial thinkers. The habits in this book will encourage you to draw, to externalize your thinking, and to map what you know. Cognitive psychologists have shown in study after study that human beings have an immense capacity for spatial reasoning. The habits in this book will help you tap into that capacity.

5. Experimental: The fifth mindset encourages you to put on your scientific-thinking hat. Many of us may not have scientific training, but, to do discovery well, we need to learn to think like scientists: identifying assumptions and gathering evidence. The habits in this book will help you develop and hone an experimental mindset.

6. Continuous: And finally, these habits will help you evolve from a project mindset to a continuous mindset. Rather than thinking about discovery as something that we do at the beginning of a project, you will learn to infuse discovery continuously throughout your development process. This will ensure that you are always able to get fast answers to your discovery questions, helping to ensure that you are building something that your customers want and will enjoy.

Chapter 2: A Common Framework For Continuous Discovery

Begin With the End in Mind

Product Trio

As our product-discovery methods evolve, we are shifting from an output mindset to an outcome mindset. Rather than obsessing about features (outputs), we are shifting our focus to the impact those features have on both our customers and our business (outcomes). Starting with outcomes, rather than outputs, is what lays the foundation for product success.

When a product trio is tasked with delivering an outcome, the business is clearly communicating what value the team can create for the business. And when the business leaves it up to the team to explore the best outputs that might drive that outcome, they are giving the team the latitude they need to create value for the customer.

When a product trio is tasked with an outcome, they have a choice. They can choose to engage with customers, do the work required to truly understand their customers' context, and focus on creating value for their customers. Or they can take shortcuts: they can focus on creating business value at the cost of customers. The organizational context in which the product trio works will have a big impact on which choice the product trio will make. Some teams, however, choose to take shortcuts because they simply don't know another way of working. The framework in this chapter and the habits described in this book will help you resolve the tension between business needs and customer needs so that you can create value for your customers and your business.

The Challenge of Driving Outcomes

Most product trios don't have a lot of experience with driving outcomes. They grew up in a world where they were told what to build. Or they were asked to generate outputs, with little thought for what impact those outputs had. So, when we shift from an output mindset to an outcome mindset, we have to relearn how to do our jobs.

Unfortunately, it's not as simple as talking to customers every week. That's a good start. But we also need to consider the rest of our continuous-discovery definition:

- At a minimum, weekly touchpoints with customers

- By the team building the product

- Where they conduct small research activities

- In pursuit of a desired outcome

If product trios tasked with delivering a desired outcome want to pursue business value by creating customer value, they'll need to work to frame the problem in a customer-centric way. They'll need to discover the customer needs, pain points, and desires that, if addressed, would drive their business outcome.

To reach their desired outcome, a product trio must discover and explore the opportunity space. The opportunity space, however, is infinite. This is precisely what makes reaching our desired outcome an ill-structured problem. How the team defines and structures the opportunity space is exactly how they give structure to the ill-structured problem of reaching their desired outcome.

The implication for product trios is that two of the most important steps for reaching our desired outcome are first, how we map out and structure the opportunity space, and second, how we select which opportunities to pursue. Unfortunately, many product trios skip these steps altogether. They start with an outcome and simply start generating ideas. We do have to get to solutions: shipping code is how we ship value to our customers and create value for our business. But the right problem framing will help to ensure that we explore and ultimately ship better solutions.

The Underlying Structure of Discovery

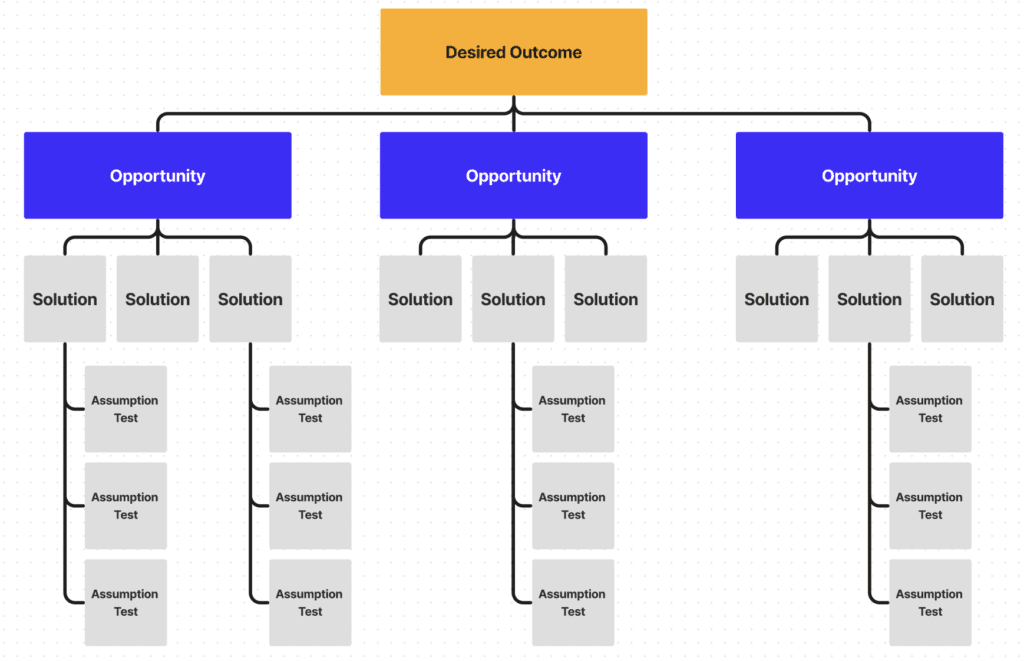

Opportunity Solution Trees

Opportunity Solution Trees (OST) have a number of benefits. They help product trios:

Resolve The Tension Between Business Needs And Customer Needs

You start by prioritizing your business need: creating value for your business is what ensures that your team can serve your customer over time. Next, the team should explore the customer needs, pain points, and desires that, if addressed, would drive that outcome. The key here is that the team is filtering the opportunity space by considering only the opportunities that have the potential to drive the business need. By mapping the opportunity space, the team is adopting a customer-centric framing for how they might reach their outcome.

Build And Maintain A Shared Understanding Across Your Trio

For most of us, when we encounter a problem, we simply want to solve it. This desire comes from a place of good intent. We like to help people. However, this instinct often gets us into trouble. We don't always remember to question the framing of the problem. We tend to fall in love with our first solution. We forget to ask, "How else might we solve this problem?"

These problems get compounded when working in teams. When we hear a problem, we each individually jump to a fast solution. When we disagree, we engage in fruitless opinion battles. These opinion battles encourage us to fall back on our organizational roles and claim decision authority (e.g., the product manager has the final say), instead of collaborating as a cross-functional team.

When a team takes the time to visualize their options, they build a shared understanding of how they might reach their desired outcome. If they maintain this visual as they learn week over week, they maintain that shared understanding, allowing them to collaborate over time. We know this collaboration is critical to product success.

Adopt A Continuous Mindset

Shifting from a project mindset to a continuous mindset is hard. We tend to take our six-month-long waterfall project, carve it up into a series of two-week sprints, and call it "Agile." But this isn't Agile. Nor is it continuous. A continuous mindset requires that we deliver value every sprint. We create customer value by addressing unmet needs, resolving pain points, and satisfying desires.

The opportunity solution tree helps teams take large, project-sized opportunities and break them down into a series of smaller opportunities. As you work your way vertically down the tree, opportunities get smaller and smaller. Teams can then focus on solving one opportunity at a time. With time, as they address a series of smaller opportunities, these solutions start to address the bigger opportunity. The team learns to solve project-sized opportunities by solving smaller opportunities continuously.
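The breakdown described above can be sketched as a small tree structure. This is only an illustrative sketch, not a format the book prescribes: the class names `Opportunity` and `OpportunitySolutionTree`, the method names, and the example opportunities are all hypothetical. Only the outcome wording echoes the dog-food example that appears later in these notes.

```python
from dataclasses import dataclass, field


@dataclass
class Opportunity:
    """A customer need, pain point, or desire (hypothetical model)."""
    name: str
    # Larger opportunities are broken down into smaller child opportunities.
    children: list["Opportunity"] = field(default_factory=list)

    def leaves(self):
        """Return the smallest opportunities under this node, depth-first."""
        if not self.children:
            return [self]
        found = []
        for child in self.children:
            found.extend(child.leaves())
        return found


@dataclass
class OpportunitySolutionTree:
    """The desired outcome sits at the root, with opportunities beneath it."""
    outcome: str
    opportunities: list[Opportunity] = field(default_factory=list)

    def smallest_opportunities(self):
        """Names of the leaf opportunities a team could tackle one at a time."""
        return [leaf.name for top in self.opportunities for leaf in top.leaves()]


# Invented example data, for illustration only.
tree = OpportunitySolutionTree(
    outcome="Increase the number of dogs who like the food",
    opportunities=[
        Opportunity(
            "My dog won't eat the new food",
            children=[
                Opportunity("I don't know how to switch foods gradually"),
                Opportunity("My dog dislikes the flavor"),
            ],
        ),
        Opportunity("I can't tell whether my dog likes the food"),
    ],
)

print(tree.smallest_opportunities())
```

Walking down to the leaves mirrors the idea that opportunities get smaller as you move down the tree, and that solving them one by one chips away at the parent opportunity.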

Unlock Better Decision-Making

Instead of framing our decisions as "whether or not" decisions, this book will teach you to develop a "compare and contrast" mindset. Instead of asking, "Should we solve this customer need?" we'll ask, "Which of these customer needs is most important for us to address right now?" We'll compare and contrast our options. Instead of falling in love with our first idea, we'll ask, "What else could we build?" or "How else might we address this opportunity?" Visualizing your options on an opportunity solution tree will help you catch when you are asking a "whether or not" question and will encourage you, instead, to shift to a compare-and-contrast question.

Even with this decision-making framework in hand, you'll still need to guard against overconfidence (the fourth villain of decision-making). It's easy to think that, when you've done discovery well, you can't fail, but that's simply not true (as we'll see in a few stories throughout this book). Good discovery doesn't prevent us from failing; it simply reduces the chance of failures. Failures will still happen. However, we can't be afraid of failure. Product trios need to move forward and act on what they know today, while also being prepared to be wrong. The habits in this book will help you balance having confidence in what you know with doubting what you know, so that you can take action while still recognizing when you are on a risky path.

And finally, we can't talk about decision-making without tackling the dreaded problem of analysis paralysis. Many of the decisions we make in discovery feel like big strategic decisions. That's because they often are. Deciding what to build has a big impact on our company strategy, on our success as a product team, and on our customers' lives. However, most of the decisions that we make in discovery are reversible decisions. If we do the necessary work to test our decisions, we can quickly correct course when we find that we made the wrong decision. This gives us the luxury of moving quickly, rather than falling prey to analysis paralysis.

Unlock Faster Learning Cycles

Many organizations try to define clear boundaries between the roles in a product trio. As a result, some have come to believe that product managers own defining the problem and that designers and software engineers own defining the solution. This sounds nice in theory, but it quickly falls apart in practice.

The best designers evolve the problem space and the solution space together. As they explore potential solutions, they learn more about the problem, and, as they learn more about the problem, new solutions become possible. These two activities are intrinsically intertwined. The problem space and the solution space evolve together.

When we learn through testing that an idea won't work, it's not enough to move on to the next idea. We need to take time to reflect. We want to ask: "Based on my current understanding of my customer, I thought this solution would work. It didn't. What did I misunderstand about my customer?" We then need to revise our understanding of the opportunity space before moving on to new solutions. When we do this, our next set of solutions get better. When we skip this step, we are simply guessing again, hoping that we'll strike gold.

Build Confidence In Knowing What To Do Next

While many teams work top-down, starting by defining a clear desired outcome, then mapping out the opportunity space, then considering solutions, and finally running assumption tests to evaluate those solutions, the best teams also work bottom-up. They use their assumption tests to help them evaluate their solutions and evolve the opportunity space. As they learn more about the opportunity space, their understanding of how they might reach their outcome (and how to best measure that outcome) will evolve. These teams work continuously, evolving the entire tree at once.

They interview week over week, continuing to explore the opportunity space, even after they've selected a target opportunity. They consider multiple solutions for their target opportunity, setting up good "compare and contrast" decisions. They run assumption tests across their solution set, in parallel, so that they don't overcommit to less-than-optimal solutions. All along, they visualize their work on their opportunity solution tree, so that they can best assess what to do next.

Unlock Simpler Stakeholder Management

When it comes to sharing work with stakeholders, product trios tend to make two common mistakes. First, they share too much information (entire interview recordings or pages and pages of notes without any synthesis), expecting stakeholders to do the discovery work with them. Or second, they share too little of what they are learning, only highlighting their conclusions, often cherry-picking the research that best supports those conclusions. In the first instance, we are asking our stakeholders to do too much, and, in the second, we aren't asking enough of them. The key to bringing stakeholders along is to show your work. You want to summarize what you are learning in a way that is easy to understand, that highlights your key decision points and the options that you considered, and creates space for them to give constructive feedback. A well-constructed opportunity solution tree does exactly this.

When sharing your discovery work with stakeholders, you can use your tree to first remind them of your desired outcome. Next, you can share what you've learned about your customer, by walking them through the opportunity space. The tree structure makes it easy to communicate the big picture while also diving into the details when needed. Your tree should visually show what solutions you are considering and what tests you are running to evaluate those solutions. Instead of communicating your conclusions (e.g., "We should build these solutions"), you are showing the thinking and learning that got you there. This allows your stakeholders to truly evaluate your work and to weigh in with information you may not have.

Part II: Continuous Discovery Habits

Chapter 3: Focusing On Outcomes Over Outputs

"An outcome is a change in human behavior that drives business results." - Josh Seiden, Outcomes Over Outputs

"Too often we have many competing goals that all seem equally important." - Christina Wodtke, Radical Focus

When we manage by outcomes, we give our teams the autonomy, responsibility, and ownership to chart their own path. Instead of asking them to deliver a fixed roadmap full of features by a specific date in time, we are asking them to solve a customer problem or to address a business need. The key distinction with this strategy over traditional roadmaps is that we are giving the team the autonomy to find the best solution. If they are truly a continuous-discovery team, the product trio has a depth of customer and technology knowledge, giving them an advantage when it comes to making decisions about how to solve specific problems.

Additionally, this strategy leaves room for doubt. A fixed roadmap communicates false certainty. It says we know these are the right features to build, even though we know from experience their impact will likely fall short. An outcome communicates uncertainty. It says, "We know we need this problem solved, but we don't know the best way to solve it." It gives the product trio the latitude they need to explore and pivot when needed. If the product trio doesn't succeed with their initial solution, they can quickly shift to a new idea, often trying several before they ultimately find what will drive the desired outcome.

Finally, managing by outcomes communicates to the team how they should be measuring success. A clear outcome helps a team align around the work they should be prioritizing, it helps them choose the right customer opportunities to address, and it helps them measure the impact of their experiments. Without a clear outcome, discovery work can be never-ending, fruitless, and frustrating.

Exploring Different Types of Outcomes

Managing by outcomes is only as effective as the outcomes themselves. If we choose the wrong outcomes, we'll still get the wrong results. When considering outcomes for specific teams, it helps to distinguish between business outcomes, product outcomes, and traction metrics. A business outcome measures how well the business is progressing. A product outcome measures how well the product is moving the business forward. A traction metric measures usage of a specific feature or workflow in the product.

| Type | Purpose | Example |
|---|---|---|
| Business outcome | Measure business value | Retention |
| Product outcome | Measure how the product drives business value | Dogs who like the food |
| Traction metric | Track usage of specific features | Owners who use the transition calendar |

Business outcomes start with financial metrics (e.g., grow revenue, reduce costs), but they can also represent strategic initiatives (e.g., grow market share in a specific region, increase sales to a new customer segment). Many business outcomes, however, are lagging indicators. They measure something after it has happened. It's hard for lagging indicators to guide a team's work because it puts them in react mode, rather than empowers them to proactively drive results. For Sonja's team, 90-day retention was a lagging indicator of customer satisfaction with the service. By the time the team was able to measure the impact of their product changes, customers had already churned. Therefore, we want to identify leading indicators that predict the direction of the lagging indicator. Sonja's team believed that increasing the perceived value of tailor-made dog food and increasing the number of dogs who liked the food were leading indicators of customer retention. Assigning a team a leading indicator is always better than assigning a lagging indicator.

As a general rule, product trios will make more progress on a product outcome rather than a business outcome. Remember, product outcomes measure how well the product moves the business forward. By definition, a product outcome is within the product trio's span of control. Business outcomes, on the other hand, often require coordination across many business functions.

Assigning product outcomes to product trios increases a sense of responsibility and ownership. If a product team is assigned a business outcome, it's easy for the trio to blame the marketing or customer-support team for not hitting their goal. However, if they are assigned a product outcome, they alone are responsible for driving results. When multiple teams are assigned the same outcome, it's easy to shift blame for lack of progress.

Finally, when setting product outcomes, we want to make sure that we are giving the product trio enough latitude to explore. This is where the distinction between product outcomes and traction metrics can be helpful. It's also a key delineation between an outcome mindset and an output mindset.

When we assign traction metrics to product trios, we run the risk of painting them into a corner by limiting the types of decisions that they can make. Product outcomes, generally, give product trios far more latitude to explore and will enable them to make the decisions they need to ultimately drive business outcomes. However, there are two instances in which it is appropriate to assign traction metrics to your team.

First, assign traction metrics to more junior product trios. Improving a traction metric is more of an optimization challenge than a wide-open discovery challenge and is a great way for a junior team to get some experience with discovery methods before giving them more responsibility. For your more mature teams, however, stick with product outcomes.

Second, if you have a mature product and you have a traction metric that you know is critical to your company's success, it makes sense to assign this traction metric to an optimization team. For example, Sonja's team may already know that customers want to use the transition calendar (perhaps they use it every day) but the recommended schedule isn't as effective as they hoped it would be. In this case, it might make sense to have a team focused on optimizing the schedule. If the broader discovery questions have already been answered, then it's perfectly fine to assign a traction metric to a team. The key is to use traction metrics only when you are optimizing a solution and not when the intent is to discover new solutions. In those instances, a product outcome is a better fit.

Outcomes Are the Result of a Two-Way Negotiation

Setting a team's outcome should be a two-way negotiation between the product leader (e.g., Chief Product Officer, Vice President of Product, etc.) and the product trio.

The product leader brings the across-the-business view of the organization and should communicate what's most important for the business at this moment in time. But to be clear, the product leader should not be dictating solutions. Instead, the leader should be identifying an appropriate product outcome for the trio to focus on. Outcomes are a good way for the leader to communicate strategic intent.

The product trio brings customer and technology knowledge to the conversation and should communicate how much the team can move the metric in the designated period of time (usually one calendar quarter). The trio should not be required to communicate what solutions they will build at this time, as this should emerge from discovery.

If the business needs the team to have a bigger impact on the outcome, the trio will need to adjust their strategy to be more ambitious, and the product leader will need to understand that more ambitious outcomes carry more risk. The team will need to make bigger bets to increase their chance of success, but these bigger bets typically come with a higher chance of failure. Similarly, the product leader and product trio can negotiate resources (e.g., adding engineers to the team) and/or remove competing tasks from the team's backlog, giving them more time to focus on delivering their outcome.

Encouraging a two-way negotiation between the product leader and the product trio ensures that the right organizational knowledge is captured during the selection of the outcome. It, however, has another benefit. Bianca Green, business faculty at University of Twente (in the Netherlands), and her colleagues found that teams who participated in the setting of their own outcomes took more initiative and thus performed better than colleagues who were not involved in setting their outcomes. This is an area where the research supports industry best practice.

A Guide for Product Trios

Product trios tend to fall into four categories when it comes to setting outcomes:

1. they are asked to deliver outputs and don't work toward outcomes

This is, by far, the most common scenario. When your product leader assigns a new initiative to your product trio, ask your leader to share more of the business context with you. Explore these questions:

- Who is the target customer for this initiative?

- What business outcome are we trying to drive with this initiative?

- Why do we think this initiative will drive that outcome? (Be careful with Why? questions. They can put some leaders on the defensive. Use your best judgment, based on your knowledge of your specific leader.)

Try to connect the dots between the business outcome and potential product outcomes. Can you clearly define how this new initiative will impact a product outcome? Is that product outcome a leading indicator of the lagging business outcome?

2. their product leader sets their outcome with little input from the team

If your product leader is asking you to deliver an outcome with no input from your team, try these tips to shift to a two-way negotiation:

- If you are being asked to deliver a business outcome, try mapping out which product outcomes might drive that business outcome, and get feedback from your leader.

- If you are being asked to deliver a product outcome, ask your leader for more of the business context. Try asking, "What business outcomes are we trying to drive with this product outcome?"

- In either case, clearly communicate how far you think you can get in the allotted time.

3. the product trio sets their own outcomes with little input from their product leader

If your team is setting their own outcome with no input from the product leader, try these tips to shift to a two-way negotiation:

- Before you set your own outcome, ask your product leader for more business context. Try these questions:

  - What's most important to the business right now? Try to frame this conversation in terms of business outcomes.

  - Is there a customer segment that is more important than other customer segments?

  - Are there strategic initiatives we should know about?

- Use the information you gain to map out the most important business outcomes and what product outcomes might drive those business outcomes. Get feedback from your leader.

- Choose a product outcome that your team has the most influence over.

4. the product trio is negotiating their outcomes with their leaders as described in this chapter

If your product trio is already negotiating outcomes with your product leader, congratulations! However, remember to keep these tips in mind as you set outcomes with your leader:

- Is your team being tasked with a product outcome and not a business outcome or a traction metric?

- If you are being tasked with a traction metric, is the metric well known? Have you already confirmed that your customers want to exhibit the behavior being tracked?

- If it's the first time you are working on a new metric, are you starting with a learning goal (e.g., discover the relevant opportunities) before committing to a challenging performance goal?

- If you have experience with the metric, have you set a specific and challenging goal?

Avoid These Common Anti-Patterns

Pursuing too many outcomes at once

Most of us are overly optimistic about what we can achieve in a short period of time. No matter how hard we work, our companies will always ask more of us. Put these two together, and we often see product trios pursuing multiple outcomes at once. What happens when we do this is that we spread ourselves too thin. We make incremental progress (at best) on some of our outcomes but rarely have a big impact on any of our outcomes. Most teams will have more of an impact by focusing on one outcome at a time.

Ping-ponging from one outcome to another

Because many businesses have developed fire-fighting cultures (where every customer complaint is treated like a crisis), it's common for product trios to ping-pong from one outcome to the next, quarter to quarter. However, you've already learned that it takes time to learn how to impact a new outcome. When we ping-pong from outcome to outcome, we never reap the benefits of this learning curve. Instead, set an outcome for your team, and focus on it for a few quarters. You'll be amazed at how much impact you have in the second and third quarters after you've had some time to learn and explore.

Setting individual outcomes instead of product-trio outcomes

Because product managers, designers, and software engineers typically report up to their respective departments, it's not uncommon for a product trio to get pulled in three different directions, with each member tasked with a different goal. Perhaps the product manager is tasked with a business outcome, the designer is tasked with a usability outcome, and the engineer is tasked with a technical-performance outcome. This is most common at companies that tie outcomes to compensation. However, it has a detrimental effect. The goal is for the product trio to collaborate to achieve product outcomes that drive business outcomes. This isn't possible if each member is focused on their own goal. Instead of setting individual outcomes, set team outcomes.

Choosing an output as an outcome

Shifting to an outcome mindset is harder than it looks. We spend most of our time talking about outputs. So, it's not surprising that we tend to confuse the two. Even when teams intend to choose an outcome, they often fall into the trap of selecting an output. I see teams set their outcome as "Launch an Android app" instead of "Increase mobile engagement" or "Get to feature parity on the new tech stack" instead of "Transition customers to the new tech stack." A good place to start is to make sure your outcome represents a number even if you aren't sure yet how to measure it. But even then, outputs can creep in. I worked with a team that helped students choose university courses who set their outcome as "Increase the number of course reviews on our platform." When I asked them what the impact of more reviews was, they answered, "More students would see courses with reviews." That's not necessarily true. The team could have increased the number of reviews on their platform, but if they all clustered around a small number of courses, or if they were all on courses that students didn't view, they wouldn't have an impact. A better outcome is "Increase the number of course views that include reviews." To shift your outcome from less of an output to more of an outcome, question the impact it will have.
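The course-review example can be made concrete with a little arithmetic. The sketch below uses invented numbers and hypothetical function names (`total_reviews`, `views_with_reviews`). It only illustrates why counting reviews and counting course views that include reviews can diverge.

```python
def total_reviews(reviews_per_course):
    """Output-style metric: how many reviews exist on the platform."""
    return sum(reviews_per_course.values())


def views_with_reviews(reviews_per_course, views_per_course):
    """Outcome-style metric: course views where at least one review was shown."""
    return sum(
        views
        for course, views in views_per_course.items()
        if reviews_per_course.get(course, 0) > 0
    )


# Invented data: course A is rarely viewed, B and C are viewed often.
views = {"A": 10, "B": 500, "C": 490}

baseline = {"A": 5, "B": 0, "C": 0}
clustered = {"A": 45, "B": 0, "C": 0}   # 40 new reviews, all on course A
spread = {"A": 5, "B": 20, "C": 20}     # 40 new reviews on viewed courses

for label, reviews in [("baseline", baseline), ("clustered", clustered), ("spread", spread)]:
    print(label, total_reviews(reviews), views_with_reviews(reviews, views))
# baseline 5 10
# clustered 45 10
# spread 45 1000
```

Both scenarios add the same 40 reviews, so the review count looks identical, but only the spread scenario changes how many course views actually include a review.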

Focusing on one outcome to the detriment of all else

Like we saw in the Wells Fargo story, focusing on one metric at the cost of all else can quickly derail a team and company. In addition to your primary outcome, a team needs to monitor health metrics to ensure they aren't causing detrimental effects elsewhere. For example, customer-acquisition goals are often paired with customer-satisfaction metrics to ensure that we aren't acquiring unhappy customers. To be clear, this doesn't mean one team is focused on both acquisition and satisfaction at the same time. It means their goal is to increase acquisition without negatively impacting satisfaction.

Chapter 4: Visualizing What You Know

"Where actual or virtual, an external representation creates common ground..." - Barbara Tversky, Mind in Motion

"If we give each other time to explain ourselves using words and pictures, we build shared understanding." - Jeff Patton, User Story Mapping

When working with an outcome for the first time, it can feel overwhelming to know where to start. It helps to first map out your customers' experience as it exists today. This trio started by mapping out what they thought was preventing their customers from submitting their applications. But they didn't do so by getting together in a room to discuss what they knew. Instead, they started out with each product-trio member mapping out their own perspective. This was uncomfortable at first. The designer had little context for what might be going wrong. The engineer had a lot of technical knowledge but had little firsthand contact with customers. The product manager had some hunches as to what was going wrong but didn't have any analytics to confirm those hunches. They each did the best they could.

Once they had each created their individual map, they took the time to explore each other's perspectives. The product manager had the best grasp of the "known" challenges-the customer complaints that made their way to their call center and through support tickets. The designer missed a few steps in the process but did a great job of capturing the confusion and insecurity that the customer might be feeling in the process. Because he was new to the company, he was able to view the application process from an outsider's perspective. The engineer's map accurately captured the process and added detail about how one step informed another step. This uncovered insights into how a customer might get derailed if an earlier step had been completed incorrectly.

Each map represented a unique perspective-together they represented a much richer understanding of the opportunity space they intended to explore. The trio quickly worked to merge their unique perspectives into a shared experience map that better reflected what they collectively knew. Their map wasn't set in stone. They knew that it contained hunches and possibilities, not truth. But it gave them a clear starting point. They had made explicit what they thought they knew, where they had open questions, and what they needed to vet in their upcoming customer interviews.

Set the Scope of Your Experience Map

To get started, you'll want to first set the scope of your experience map. If you start jotting down everything you know about your customer, you'll quickly get overwhelmed. Instead, start with your desired outcome. The trio in the opening story was trying to increase application submissions, so they mapped out what they thought their customers' experience was as they filled out the application. They specifically focused on this question: "What's preventing our customers from completing their application today?" Their outcome constrained what they tried to capture.

Think strategically about how broad or narrow to set the scope. When a team is focused on an optimization outcome, like increasing application submissions, it's fine to define the scope narrowly. However, when working on a more open-ended outcome, you'll want to expand the scope of your experience map.

Start Individually to Avoid Groupthink

It's easy when working in a team to experience groupthink. Groupthink occurs when a group of individuals underperform due to the dynamics of the group. There are a number of reasons for this. When working in a group, it's common for some members to put in more effort than others; some group members may hesitate or even refrain from speaking up, and groups tend to perform at the level of the least-capable member. In order to leverage the knowledge and expertise in our trios, we need to actively work to counter groupthink.

To prevent groupthink, it's critical that each member of the trio start by developing their own perspective before the trio works together to develop a shared perspective. This is counterintuitive. It's going to feel inefficient. We are used to dividing and conquering, not duplicating work. But in instances where it's important that we explore multiple perspectives, the easiest way to get there is for each product-trio member to do the work individually.

Experience Maps Are Visual, Not Verbal

Many of us stopped drawing sometime in elementary school. As a result, we have the drawing skills of a child. This makes drawing uncomfortable. Regardless of how well you draw, drawing is a critical thinking aid that you will want to tap into. Drawing allows us to externalize our thinking, which, in turn, helps us examine that thinking. When we draw an experience map, rather than verbalize it, it's easier to see gaps in our thinking, to catch what's missing, and to correct what's not quite right.

As you get started, you are going to be tempted to describe this context with words. Don't. Language is vague. It's easy for two people to think they are in agreement over the course of a conversation, but, still, each might walk away with a different perspective. Drawing is more specific. It forces you to be concrete. You can't draw something specific if you haven't taken the time to get clear on what those specifics are. Your goal during this exercise is to do the work to understand what you know, not to generalize vague thoughts about your customer. So set aside some time, grab a pen and paper, and start drawing. Push through the discomfort of being a beginner, and you'll be reaping the benefits in no time.

Explore the Diverse Perspectives on Your Team

Take turns sharing your drawings among your trio. As you explore your teammates' perspectives, ask questions to make sure you fully understand their point of view. Give them time and space to clarify what they think and why they think it. Don't worry about what they got right or wrong (from your perspective). Instead, pay particular attention to the differences. Be curious.

When it's your turn to share, don't advocate for your drawing. Simply share your point of view, answer questions, and clarify your thinking.

Remember, everyone's perspective can and should contribute to the team's shared understanding. We saw in our opening story that the trio's shared map was stronger because they synthesized the unique perspectives on the team into a richer experience map than any of them could have individually created.

Co-Create a Shared Experience Map

- Start by turning each of your individual maps into a collection of nodes and links. A node is a distinct moment in time, an action, or an event, while links are what connect nodes together. Links help show relationships between the nodes. Links can show the movement through the nodes.

- Create a new map that includes all of your individual nodes. Arrange the nodes from all of your individual maps into a new, comprehensive map.

- Collapse similar nodes together. Many of your individual maps will include overlapping nodes. Feel free to collapse similar nodes together. However, be careful. Make sure you are collapsing like items and not generalizing so much that you lose key detail.

- Determine the links between each node. Use arrows to show the flow through the nodes. Don't just map out the happy path. Remember to capture where steps need to be redone, where people might give up out of frustration, or where steps might loop back on themselves.

- Add context. Once you have a map that represents the nodes and links of your customer's journey, add context to each step. What are they thinking, feeling, and doing at each step of the journey? Try to capture this context visually. It will help the team (and your stakeholders) synthesize what you know, and it will be easier to build on this shared understanding.
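The merge and collapse steps above can be sketched as a small directed graph. This is only an illustration, not something from the book: the `ExperienceMap` class, its method names, and the node labels are all hypothetical, and a real map would stay visual rather than live in code.

```python
from dataclasses import dataclass, field


@dataclass
class ExperienceMap:
    """Directed graph: nodes are moments, actions, or events; links show flow."""
    nodes: set[str] = field(default_factory=set)
    links: set[tuple[str, str]] = field(default_factory=set)

    def add_link(self, source: str, target: str) -> None:
        """Add an arrow from one node to another, creating the nodes if needed."""
        self.nodes.update((source, target))
        self.links.add((source, target))

    def merge(self, other: "ExperienceMap") -> "ExperienceMap":
        """Build a new map that includes every node and link from both maps."""
        return ExperienceMap(self.nodes | other.nodes, self.links | other.links)

    def collapse(self, duplicate: str, keep: str) -> None:
        """Fold a node judged equivalent to `keep` into it and rewire its links."""
        def rename(node: str) -> str:
            return keep if node == duplicate else node

        new_links = set()
        for a, b in self.links:
            ra, rb = rename(a), rename(b)
            if ra == rb and a != b:
                continue  # a link between the two merged nodes is now redundant
            new_links.add((ra, rb))
        self.nodes.discard(duplicate)
        self.nodes.add(keep)
        self.links = new_links


# Hypothetical individual maps for the application-submission example.
pm_map = ExperienceMap()
pm_map.add_link("start application", "upload documents")
pm_map.add_link("upload documents", "submit application")

engineer_map = ExperienceMap()
engineer_map.add_link("begin application", "upload documents")
# Not just the happy path: a failed upload sends the customer back to the start.
engineer_map.add_link("upload documents", "begin application")

shared = pm_map.merge(engineer_map)
shared.collapse("begin application", "start application")
```

Deciding which nodes are truly "like items" remains a judgment call for the trio; the code only records the decision once it has been made.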

Avoid Common Anti-Patterns

- Getting bogged down in endless debate. If you find yourself debating minute details, try to draw out your differences instead of debating them. We often debate details when we already agree. We just don't realize we already agree. When you are forced to draw an idea, you have to get specific enough to define what it is. This often helps to quickly clear up the disagreement or to pinpoint exactly where the disagreement occurs. Drawing really is a magic tool in your toolbox. Use it often.

- Using words instead of visuals. Because many of us are uncomfortable with our drawing skills, we tend to revert back to words and sentences. Instead, use boxes and arrows. Remember, you don't have to create a piece of art. Stick figures and smiley faces are perfectly okay. But drawing engages a different part of your brain than language does. It helps us see patterns that are hard to detect in words and sentences. The more you draw, the more you'll realize drawing is a superpower.

- Moving forward as if your map is true. One of the drawbacks of documenting a customer-experience map is that it can start to feel like truth. Remember, this is your first draft, intended to capture what you think you know about your customer. We'll test this understanding in our customer interviews and again when we start to explore solutions.

- Forgetting to refine and evolve your map as you learn more. It can be easy to think of this activity as a one-time event. However, as you discover more about your customer, you'll want to make sure that you continue to hone and refine this map as a team. Otherwise, you'll find that your individual perspectives will quickly start to diverge even when you are working with the same set of source data. Each person will take away different points from the same customer interview or the same assumption test. You'll want to continuously synthesize what you collectively know so that you maintain a shared understanding of your customer context.

Chapter 5: Continuous Interviewing

Some people say, "Give the customers what they want." But that's not my approach. Our job is to figure out what they're going to want before they do. I think Henry Ford once said, "If I'd asked customers what they wanted, they would have told me, 'A faster horse!'" People don't know what they want until you show it to them. That's why I never rely on market research. Our task is to read things that are not yet on the page. - Steve Jobs, CEO of Apple, in Walter Isaacson's Steve Jobs

"Confidence is a feeling, which reflects the coherence of the information and the cognitive ease of processing it. It is wise to take admissions of uncertainty seriously, but declarations of high confidence mainly tell you that an individual has constructed a coherent story in his mind, not necessarily that the story is true." - Daniel Kahneman, Thinking, Fast and Slow

Steve Jobs, the founder and former CEO of Apple, often discounted market research. He argued, "People don't know what they want until you show it to them." Jobs was right. Customers don't always know what they want. Most aren't well-versed in technology. Nor do they have time to dream up what's possible. That's our job. That's what Jobs meant when he said, "Our task is to read things that are not yet on the page." We are the inventors, not our customers.

The purpose of customer interviewing is not to ask your customers what you should build. Instead, the purpose of an interview is to discover and explore opportunities. Remember, opportunities are customer needs, pain points, and desires. They are opportunities to intervene in your customers' lives in a positive way.

Steve Jobs knew the importance of discovering opportunities better than most. He and the rest of the Apple team were masters at uncovering unmet needs. When the first iPhone was released in 2007, it wasn't the first smartphone on the market. People resisted the idea of an on-screen keyboard. There were no third-party apps. But even though Apple wasn't first to the market and they launched with a limited feature set, the first iPhone solved several customer needs that other smartphones didn't.

The Challenges With Asking People What They Need

During a workshop, I asked a woman what factors she considered when buying a new pair of jeans. She didn't hesitate to answer. She said, "Fit is my number-one factor." I then asked her to tell me about the last time she bought a pair of jeans. She said, "I bought them on Amazon." I asked, "How did you know they would fit?" She replied, "I didn't, but they were a brand I liked, and they were on sale."

What's the difference between her two responses? Her first response tells me how she thinks she buys a pair of jeans. Her second response tells me how she actually bought a pair of jeans. This is a crucial difference. She thinks she buys a pair of jeans based on fit, but brand loyalty, the convenience of online shopping, and price (or getting a good deal) were more important when it came time to make a purchase.

This story isn't unique. I've asked people these same two questions countless times in workshops. The purchasing factors often vary, but there is always a gap between the first answer and the second. These participants aren't lying. We just aren't very good at understanding our own behavior.

This is exactly why in Thinking, Fast and Slow, behavioral economist Daniel Kahneman claimed, "A remarkable aspect of your mental life is that you are rarely stumped." Your brain will gladly give you an answer. That answer, however, may not be grounded in reality. In fact, Kahneman outlines dozens of ways our brains get it wrong. It's also why Kahneman argues confidence isn't a good indicator of truth or reality. He writes, "Confidence is a feeling, which reflects the coherence of the information and the cognitive ease of processing it." Not necessarily the truth.

As long as your brain can summon a compelling reason, it will feel like the truth-even if it isn't. Gazzaniga's participants thought they knew why they selected the card that they did. The left-brain interpreter filled in the missing details, creating a coherent story. The participant was confident-and, unfortunately, wrong.

Our failure wasn't due to a lack of research. It was because we asked our customers the wrong questions. We built a product based on a coherent story told by both the thought leaders in our space and by our customers themselves. But it wasn't a story that was based in reality. If you want to build a successful product, you need to understand your customers' actual behavior-their reality-not the story they tell themselves.

Too often in customer interviews, we ask direct questions. We ask, "What criteria do you use when purchasing a pair of jeans?" Or we ask, "How often do you go to the gym?" But these types of questions invoke our ideal selves, and they encourage our brains to generate coherent but not necessarily reliable responses. In the coming pages, you'll learn a far more reliable method for learning about your customers' actual behavior.

Distinguish Research Questions From Interview Questions

In any given interview, you'll want to balance broadly exploring the needs, pain points, and desires that matter most to that particular customer and diving deep on the specific opportunities that are most relevant to you. Every customer is unique, and, no matter how well you recruit, you may find that your customer doesn't care about the opportunity you most need to learn about. We don't want to spend time exploring a specific opportunity with a customer if that opportunity isn't important to them. Our primary research question in any interview should be: What needs, pain points, and desires matter most to this customer?

Since we can't ask our customers direct questions about their behavior, the best way to learn about their needs, pain points, and desires is to ask them to share specific stories about their experience. You'll need to translate your research questions into interview questions that elicit these stories.

Instead of asking, "What criteria do you use when purchasing a pair of jeans?" - a direct question that encourages our participant to speculate about their behavior - we want to ask, "Tell me about the last time you purchased a pair of jeans." The story will help us uncover what criteria our participant used when purchasing a pair of jeans, but because the answer is situated in a specific instance (an actual time when they bought jeans), it will reflect their actual behavior, not their perceived behavior.

You'll want to tailor the scope of the question based on what you need to learn at that moment in time. A narrow scope will help you optimize your existing product. Broader questions will help you uncover new opportunities. The broadest questions might help you uncover new markets. The appropriate scope will depend on the scope you set when creating your experience map.

Excavate the Story

You'll notice, as you excavate the story, that your participant will bounce back and forth between the story they are telling and generalizing about their behavior. You might ask, "What challenges did you face?" and they may respond with, "I usually..." or, "In general, I have this challenge..." You'll want to gently guide them back to telling you about this specific instance. You might say, "In this specific example, did you face that challenge?"

Keep the interview grounded in specific stories to ensure that you collect data about your participants' actual behavior, not their perceived behavior. And remember, like most of the habits in this book, it takes practice. Don't get discouraged. Keep at it. You will get better with time.

You Won't Always Get What You Want

With story-based interviewing, you won't always collect the story that you want. That's okay. The golden rule of interviewing is to let the participant talk about what they care about most. You can steer the conversation in two ways.

First, you decide which type of story to collect. You can ask a more open question like: "Tell me about the last time you watched streaming entertainment." Or you can ask for a more specific story: "Tell me about the last time you watched streaming entertainment on a mobile device."

Second, you can use your story prompts to dig deeper into different parts of the story. If you are primarily concerned with how they chose what to watch, dig into that part of the story. If you aren't particularly interested in what device they watch on, don't ask for that detail if they leave it out of their story. Let your research questions guide your story prompts.

However, even so, you might encounter some participants who simply don't cooperate. They might not have a relevant story. They might be motivated to tell you about a different part of the story. They might not want to tell you a story at all. They might give one-sentence answers. Or they might want to share their feature ideas or gripe about how your product works.

In these instances, you'll want to do the best you can to capture the value the participant is willing to share, but don't force it. You always want to respect what the participant cares about most. Remember, with continuous interviewing, you'll be interviewing another customer soon enough. When we rarely interview, a disappointing interview can feel painful. When we interview continuously, a disappointing interview is easily forgotten.

Synthesize as You Go

Interview Snapshot

An interview snapshot is a one-pager designed to help you synthesize what you learned in a single interview. It's how you are going to turn your copious notes into actionable insights. Your collection of snapshots will act as a reference or index to the customer knowledge bank you are building through continuous interviewing.

After you've conducted even a handful of interviews, let alone the dozens you will conduct each year, interviews will start to blur together. You don't want to rely on your memory to keep your research straight. That's the job of an interview snapshot. Snapshots are designed to help you remember specific stories. They help you identify opportunities and insights from each and every interview.

The cliché "A picture is worth a thousand words" is true. The more visual your snapshot, the easier it will be for the team to remember the stories you collected-even weeks or months later. With permission, include a photo of the participant. Grab one from a social-media profile. Grab a screenshot from a video call. Snap a photo during an in-person interview. If your corporate guidelines require that you anonymize your interview data, or if you are interviewing participants about sensitive topics, skip the photo, and replace it with a visual that will help you remember their specific story. This could be a workplace logo, the car they drive, or even a cat meme that represents their story. The photo should help you put that interview snapshot into context. It should help you remember the stories that you heard.

At the top of the snapshot, include a quote that represents a memorable moment from their story. This might be an emotional quote or a distinct behavior that stood out. Like the photo, the quote acts as a key for unlocking your memory of the specific stories that they told. I can still remember memorable quotes from interviews that I did years ago. A couple of my favorites are "I've worked here for three years. But they feel like dog years." And "I'm old school. Agile doesn't work for me." When a participant uses vivid language, be sure to capture their exact words.

To help put a specific interview into context, you'll want to capture some quick facts about the customer. The quick facts will change from company to company, but they should help you identify what type of customer you were talking to. For example, a service that matches job candidates with companies might segment their employer customers by size (e.g., SMB, enterprise) or they might list average annual contract size. A streaming-entertainment service might list the customer's sign-up date and average hours watched each week. If they segment further, they might even include behavioral traits like binge-watcher or active referrer. The goal of the quick-facts section is to help you understand how the stories you heard in this interview may be similar to or different from those you heard from other customers.

The photo and the memorable quote will act as keys that help you to unlock your memory of the stories you heard. The quick facts help you situate those stories in the right context. Now you want to capture the heart of what you learned. You'll do this by identifying the insights and opportunities that you heard in the interview.

An opportunity represents a need, a pain point, or a desire that was expressed during the interview. Be sure to represent opportunities as needs and not solutions. If the participant requests a specific feature or solution, ask about why they need that, and capture the opportunity (rather than the solution). A good way to do this is to ask, "If you had that feature, what would that do for you?" For example, if an interviewee says, "I wish I could just say the name of the movie I'm searching for," that's a feature request. If you ask, "What would that do for you?" they might respond, "I don't want to have to type out a long movie title." That's the underlying need. The benefit of capturing the need and not just the solution is that the need opens up more of the solution space. We could add voice search to address this need, but we also could auto-complete movie titles as they type.

Opportunities don't need to be exact quotes, but you should frame them using your customer's words. This will help ensure that you are capturing the opportunity from your customer's perspective and not from your company's perspective.

Throughout the interview, you might hear interesting insights that don't represent needs, pain points, or desires. Perhaps the participant shares some unique behavior that you want to capture, but you aren't sure yet what to do with this information. Capture these insights on your interview snapshot. Over time, insights often turn into opportunities.

The goal with the snapshot is to capture as much of what you heard in each interview as possible. It's easy to discount a behavior as unique to a particular participant, but you should still capture what you heard on the interview snapshot. Be as thorough as possible. You'll be surprised how often an opportunity that seems unique to one customer becomes a common pattern heard in several interviews.
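The parts of a snapshot described above (visual, memorable quote, quick facts, opportunities, insights) can be sketched as a simple record. This is a minimal illustration under assumptions, not the book's template: the `InterviewSnapshot` class, the `recurring_opportunities` helper, and all sample data other than the movie-title need are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class InterviewSnapshot:
    """One-page synthesis of a single interview."""
    participant: str
    memorable_quote: str
    visual: str = ""  # photo, or a stand-in image when data must be anonymized
    quick_facts: dict[str, str] = field(default_factory=dict)
    opportunities: list[str] = field(default_factory=list)  # needs, not solutions
    insights: list[str] = field(default_factory=list)


def recurring_opportunities(snapshots, min_interviews=2):
    """Return opportunities heard in at least `min_interviews` interviews."""
    counts = Counter()
    for snap in snapshots:
        # Count each opportunity once per interview, however often it came up.
        counts.update(set(snap.opportunities))
    return {opp: n for opp, n in counts.items() if n >= min_interviews}


# Hypothetical snapshots for a streaming-entertainment service.
snapshots = [
    InterviewSnapshot(
        participant="Participant A",
        memorable_quote="(placeholder quote)",
        quick_facts={"segment": "binge-watcher"},
        opportunities=["I don't want to have to type out a long movie title"],
    ),
    InterviewSnapshot(
        participant="Participant B",
        memorable_quote="(placeholder quote)",
        quick_facts={"segment": "active referrer"},
        opportunities=[
            "I don't want to have to type out a long movie title",
            "I want access to more compelling content",
        ],
    ),
]

patterns = recurring_opportunities(snapshots)
```

Matching on exact strings is a simplification. In practice, the team would decide by hand when two differently worded needs are the same opportunity.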

Interview Every Week

Weekly interviewing is foundational to a strong discovery practice. Interviewing helps us explore an ever-evolving opportunity space. Customer needs change. New products disrupt markets. Competitors change the landscape. As our products and services evolve, new needs, pain points, and desires arise. A digital product is never done, and the opportunity space is never finite or complete.

From a habit standpoint, it's much easier to maintain a habit than to start and stop a habit. If you interview every week, you'll be more likely to keep interviewing every week. Every week that you don't interview increases the chances that you'll stop interviewing altogether. To nurture your interviewing habit, interview at least one customer every week.

Automate the Recruiting Process

The hardest part about continuous interviews is finding people to talk to. In order to make continuous interviewing sustainable, we need to automate the recruiting process. Your goal is to wake up Monday morning with a weekly interview scheduled without you having to do anything.

When a customer interview is automatically added to your calendar each week, it becomes easier to interview than not to interview. This is your goal.

Recruit Participants While They Are Using Your Product or Service

The most common and easiest way to find interview participants is to recruit them while they are using your product or service. You can integrate a single question into the flow of your product: "Do you have 20 minutes to talk with us about your experience in exchange for $20?" Be sure to customize the copy to reflect the ask-and-offer that works best for your audience. If the visitor answers "Yes," ask for their phone number.

Interview Your Customer Advisory Board

While most product teams worry their customers are too busy to talk with them, for most teams, this won't be true. We dramatically underestimate how much our customers want to help. If you are solving a real need and your product plays an important role in your customers' lives, they will be eager to help make it better. However, there are some audiences that are extremely hard to reach. In these instances, setting up a customer-advisory board will help.

One advantage of interviewing the same customers month over month is that you get to learn about their context in-depth and see how it changes over time. The risk is that you'll design your product for a small subset of customers that might not reflect the broader market. You can pair this recruiting method with one or two of the other methods to avoid this fate.

Interview Together, Act Together

Product trios should interview together. Some teams prefer to let one role, usually the product manager or the designer, be the "voice of the customer." However, our goal as a product trio is to collaborate in a way that leverages everyone's expertise. If one person is the "voice of the customer," that role will trump every other role.

Imagine that a product manager and a designer disagree on how to proceed. The designer has done all the interviewing. It's easy for the designer to argue, "This is what the customer wants." Whether or not that is true, the product manager has no response to that. Designating one person as the "voice of the customer" gives that person too much power in a team decision-making model. The goal is for all team members to be the voice of the customer.

Additionally, the more diverse your interviewing team, the more value you will get from each interview. What we hear in an interview will be influenced by our prior knowledge and experience. A product manager will hear things that an engineer might not pick up on, and vice versa.

Avoid These Common Anti-Patterns

- Relying on one person to recruit and interview participants. To make sure continuous interviewing is a robust habit, make sure everyone on your team is well-versed in recruiting and interviewing.

- Asking who, what, why, how, and when questions. Instead, generate a list of research questions (what you need to learn), and identify one or two story-based interview questions (what you'll ask). Remember, a story-based interview question starts with, "Tell me about a specific time when..."

- Interviewing only when you think you need it. Remember, it's much easier to continue a weekly habit than to start and stop a periodic behavior. Continuous interviewing ensures that you stay close to your customers. More importantly, continuous interviewing will help to ensure that you can get fast answers to your daily questions.

- Sharing what you learned by sending out pages of notes and/or sharing a recording. Instead, use your interview snapshots to share what you are learning with the rest of the organization.

- Stopping to synthesize a set of interviews. Instead, we interview every week. Rather than synthesizing a batch of interviews, synthesize as you go, using interview snapshots.

Chapter 6: Mapping The Opportunity Space

The Power of Opportunity Mapping

As you collect customers' stories, you are going to hear about countless needs, pain points, and desires. Our customers' stories are rife with gaps between what they expect and how the world works. Each gap represents an opportunity to serve your customer. However, it's easy to get overwhelmed and not know where to start. Even if you worked tirelessly in addressing opportunity after opportunity for the rest of your career, you would never fully satisfy your customers' desires. This is why digital products are never complete. How do we decide which opportunities are more important than others? How do we know which should be addressed now and which can be pushed to tomorrow? It's hard to answer either of these questions if we don't first take an inventory of the opportunity space.

Our goal should be to address the customer opportunities that will have the biggest impact on our outcome first. To do this, we need to start by taking an inventory of the possibilities. We should compare and contrast the impact of addressing one opportunity against the impact of addressing another opportunity. We want to be deliberate and systematic in our search for the highest-impact opportunity.

As the opportunity space grows and evolves, we'll have to give structure to it again and again. As we continue to learn from our customers, we'll reframe known opportunities to better match what we are hearing. As we better understand how our customers think about their world, we'll move opportunities from one branch of the tree to another. We'll rephrase opportunities that aren't specific enough. We'll group similar opportunities together. These tasks will require rigorous critical thinking, but the effort will help to ensure that we are always addressing the most impactful opportunity.

Just Enough Structure

One of the biggest challenges with opportunity mapping is that it looks deceptively simple. However, it does require quite a bit of critical thinking. You'll want to examine each opportunity to ensure it is properly framed, that you know what it means, and that it has the potential to drive your desired outcome. If you do your first tree in 30 minutes and think you are done, you are probably not thinking hard enough. However, I also see teams make the opposite mistake. They churn for hours trying to create the perfect tree. We don't want to do that, either.

The key is to find the sweet spot between giving you enough structure to see the big picture, but not so much that you are overwhelmed with detail. Unfortunately, it will take some experience to get this right, as it's a "You'll know it when you see it" type of situation.

"Structure is done, undone, and redone." You should be revisiting your opportunity solution tree often. You'll continue to reframe opportunities as you learn more about what they really mean. Seemingly simple opportunities will subdivide into myriad sub-opportunities as you start exploring them in your interviews. This is normal. You don't have to do all of this work in your first draft. Do just enough to capture what you currently know, and trust that it will continue to grow and evolve over time.

Avoid Common Anti-Patterns

- Opportunities framed from your company's perspective. Product teams think about their product and business all day every day. It's easy to get stuck thinking from your company's perspective rather than your customers' perspective. However, if we want to be truly human-centered, solving customer needs while creating value for the business, we need to frame opportunities from our customers' perspective. No customer would ever say, "I wish I had more streaming-entertainment subscriptions." But they might say, "I want access to more compelling content." Review each opportunity on your tree and ask, "Have we heard this in interviews?" If you had to add opportunities to support the structure of your tree, you might ask, "Can I imagine a customer saying this?" Or are we just wishing a customer would say this?

- Vertical opportunities. Vertical opportunities tend to arise in two situations. One: You hear similar opportunities from several interviews, and each opportunity is really saying the same thing in different words. In this case, simply reframe one opportunity to encompass the broader need, and remove the rest. Two: You're missing sibling opportunities. If each sub-opportunity only partially solves the parent, then identify which sibling opportunities are missing, and fill them in. If you aren't sure what the missing opportunities are, explore the parent opportunity in your upcoming interviews.

- Opportunities have multiple parent opportunities. If your top-level opportunities represent distinct moments in time, then no opportunity should have two parents. If you are finding that an opportunity should ladder up to more than one parent, it's framed too broadly. Get more specific. Define one opportunity for each moment in time in which that need, pain point, or desire occurs.

- Opportunities are not specific. Opportunities that represent themes, design guidelines, or even sentiment, aren't specific enough. "I wish this was easy to use," "This is too hard," and "I want to do everything on the go" are not good opportunities. However, if we make them more specific, they can become good opportunities. "I wish finding a show to watch was easier," "Entering a movie title using the remote is hard," and "I want to watch shows on my train commute" are great opportunities.

- Opportunities are solutions in disguise. Often in an interview, your customer will ask for solutions. Sometimes they will even sound like opportunities. For example, you might hear a customer say, "I wish I could fast-forward through commercials." You might be tempted to capture this as an opportunity. However, this is really a solution request. The easiest way to distinguish between an opportunity and a solution is to ask, "Is there more than one way to address this opportunity?" In this example, the only way to allow people to fast-forward through commercials is to offer a fast-forward solution. This isn't an opportunity at all. Instead, we want to uncover the implied opportunity. Maybe it's as simple as, "I don't like commercials." Why does this reframing help? If we then ask, "How might we address 'I don't like commercials'?" we can generate several options. We can create more entertaining commercials-like those we see during the Super Bowl. We can allow you to fast-forward through commercials like the customer suggested. Or we can offer a commercial-free subscription. An opportunity should have more than one potential solution. Otherwise, it's simply a solution in disguise.

- Capturing feelings as opportunities. When a customer expresses emotion in an interview, it's usually a strong signal that an opportunity is lurking nearby. However, don't capture the feeling itself as the opportunity. Instead, look for the cause of the feeling. When we capture opportunities like "I'm frustrated" or "I'm overwhelmed," we limit how we can help. We can't fix feelings. But if we capture the cause of those feelings-"I hate typing in my password every time I purchase a show" or "I'm way behind on this show"-we can often identify solutions that address the underlying cause. So, do note when a customer expresses a feeling, but consider it a signpost, and remember to let it direct you to the underlying opportunity.
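
Two of the anti-patterns above are structural, so they can be spotted mechanically if the tree is written down as data. The sketch below is my own illustration, not something from the book: it assumes the tree is stored as a list of (parent, child) pairs, and it assumes "vertical" means a parent with exactly one child, which is how the suggested fix (adding missing siblings) reads. The function names and the two top-level moments ("Deciding what to watch", "Watching a show") are hypothetical; the child opportunities are borrowed from the examples above.

```python
from collections import defaultdict

# Hypothetical opportunity map as (parent, child) pairs.
# The last pair deliberately gives one opportunity a second parent.
EDGES = [
    ("Deciding what to watch", "I wish finding a show to watch was easier"),
    ("I wish finding a show to watch was easier",
     "Entering a movie title using the remote is hard"),
    ("Watching a show", "I don't like commercials"),
    ("Watching a show", "I want to watch shows on my train commute"),
    ("Deciding what to watch", "I want to watch shows on my train commute"),
]


def vertical_opportunities(edges):
    """Return parents that have exactly one child (assumed meaning of 'vertical')."""
    children = defaultdict(list)
    for parent, child in edges:
        children[parent].append(child)
    return sorted(p for p, kids in children.items() if len(kids) == 1)


def multi_parent_opportunities(edges):
    """Return opportunities that ladder up to more than one parent."""
    parents = defaultdict(set)
    for parent, child in edges:
        parents[child].add(parent)
    return sorted(c for c, ps in parents.items() if len(ps) > 1)


if __name__ == "__main__":
    print("Only one child:", vertical_opportunities(EDGES))
    print("More than one parent:", multi_parent_opportunities(EDGES))
```

A check like this only flags candidates for review. Whether a flagged opportunity should be merged, split by moment in time, or given siblings is still a judgment call, and the framing anti-patterns (company perspective, solutions in disguise, feelings) cannot be detected this way at all.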

Chapter 7: Prioritizing Opportunities, Not Solutions

For too long, product teams have defined their work as shipping the next release. When we engage with stakeholders, we talk about our roadmaps and our backlogs. During our performance reviews, we highlight all the great features we implemented. The vast majority of our conversations take place in the solution space. We assume that success comes from launching features. This is what product thought leader Melissa Perri calls "the build trap".

This obsession with producing outputs is strangling us. It's why we spend countless hours prioritizing features, grooming backlogs, and micro-managing releases. The hard reality is that product strategy doesn't happen in the solution space. Our customers don't care about the majority of our feature releases. A solution-first mindset is good at producing output, but it rarely produces outcomes.

Instead, our customers care about solving their needs, pain points, and desires. Product strategy happens in the opportunity space. Strategy emerges from the decisions we make about which outcomes to pursue, customers to serve, and opportunities to address. Sadly, the vast majority of product teams rush past these decisions and jump straight to prioritizing features. We obsess about the competition instead of about our customers. Our strategy consists of playing catch-up, and, no matter how hard we work, we always seem to fall further and further behind.

Focus on One Target Opportunity at a Time

In the Opportunity Mapping chapter (Chapter 6), you learned that, as you work vertically down the opportunity solution tree, you are deconstructing large, intractable opportunities into a series of smaller, more solvable sub-opportunities. The benefit of this work is that it helps us adopt an Agile mindset, working iteratively, delivering value over time, rather than delivering a large project after an extended period of time.

By addressing only one opportunity at a time, we unlock the ability to deliver value iteratively over time. If we spread ourselves too thin across many opportunities, we'll find ourselves right back in the waterfall mindset of taking too long to deliver too much all at once. Instead, we want to solve one opportunity before moving on to the next.

Assessing a Set of Opportunities

1. Opportunity sizing helps us answer the questions: How many customers are affected and how often? However, we don't need to size each opportunity precisely. This can quickly turn into a never-ending data-gathering mission. Instead, we want to size a set of siblings against each other. For each set that we are considering, we want to ask, "Which of these opportunities affects the most customers?" and "the most often?" We can and should make rough estimates here.

2. Market factors help us evaluate how addressing each opportunity might affect our position in the market. Depending on the competitive landscape, some opportunities might be table stakes, while others might be strategic differentiators. Choosing one over the other will depend on your current position in the market. A missing table stake could torpedo sales, while a strategic differentiator could open up new customer segments. The key is to consider how addressing each opportunity positions you against your competitors.

3. Company factors help us evaluate the strategic impact of each opportunity for our company, business group, or team. Each organizational context is unique. Google might choose to address an opportunity that Apple would never touch. We need to consider our organizational context when assessing and prioritizing opportunities. We want to prioritize opportunities that support our company vision, mission, and strategic objectives over opportunities that don't. We want to de-prioritize opportunities that conflict with our company values.

Across all three levels (company, business group, and team), you'll also want to consider strengths and weaknesses. Some companies will be better positioned to tackle some opportunities over others. Some teams may have unique skills that give them an unfair advantage when tackling a specific opportunity. We want to take all of this into account when assessing and prioritizing opportunities.

4. Customer factors help us evaluate how important each opportunity is to our customers. If we interviewed and opportunity mapped well, every opportunity on our tree will represent a real customer need, pain point, or desire. However, not all opportunities are equally important to customers. We'll want to assess how important each opportunity is to our customers and how satisfied they are with existing solutions. We want to prioritize important opportunities where satisfaction with the current solution is low, over opportunities that are less important or where satisfaction with current solutions is high.

Embrace the Messiness

When we turn a subjective, messy decision into a quantitative math formula, we are treating an ill-structured problem as if it were a well-structured problem. The problem with this strategy is that it will lead us to believe that there is one true, right answer. And there isn't. Once we mathematize this process, we'll stop thinking and go strictly by the numbers. We don't want to do this.

Instead, we want to leave room for doubt. As Karl Weick, an educational psychologist at the University of Michigan, advises in the second opening quote, wisdom is finding the right balance between having confidence in what you know and leaving enough room for doubt in case you are wrong.

Avoid These Common Anti-Patterns

- Delaying a decision until there is more data. We do want to be data-informed. But we also want to move forward. We'll learn more from testing our decisions than we will from trying to make perfect decisions. The best way to prevent this type of analysis paralysis is to time-box your decision. Give yourself an hour or two or, at most, a day or two. Then decide, based on what you know today, and move on. Trust that you'll course-correct if you get data down the road that tells you that you made a less-than-optimal decision.

- Over-relying on one set of factors at the cost of the others. Some teams are all about opportunity sizing. Others focus exclusively on what's most important to their customers. Many teams forget to consider company factors and choose opportunities that will never get organizational buy-in. The four sets of factors (opportunity sizing, market factors, company factors, and customer factors) are designed to be lenses to give you a different perspective on the decision. Use them all.

- Working backwards from your expected conclusion. Some teams go into this exercise with a conclusion in mind. As a result, they don't use the lenses to explore the possibilities and instead use them to justify their foregone conclusion. This is a waste of time. Go into this exercise with an open mind. You'll be surprised by how often you come away from it with a new perspective.

Chapter 8: Supercharged Ideation

"Creative teams know that quantity is the best predictor of quality."- Leigh Thompson, Making the Team

"You'll never stumble upon the unexpected if you stick only to the familiar." - Ed Catmull, Creativity, Inc.

Quantity Leads to Quality

For many of us, brainstorming seems unnecessary. We hear about a customer problem or need, and our brain immediately jumps to a solution. It's human nature. We are good at closing the loop-we hear about a problem, and our brain wants to solve it. However, creativity research tells us that our first idea is rarely our best idea. Researchers measure creativity using three primary criteria: fluency (the number of ideas we generate), flexibility (how diverse the ideas are), and originality (how novel an idea is).

Similar research shows that fluency is correlated with both flexibility and originality. In other words, as we generate more ideas, the diversity and novelty of those ideas increase. Additionally, the most original ideas tend to be generated toward the end of the ideation session. They weren't the first ideas we came up with. So even though our brain is very good at generating fast solutions, we want to learn to keep the loop open longer. We want to learn to push beyond our first mediocre and obvious ideas, and delve into the realm of more diverse, original ideas.

Now, not all opportunities need an innovative solution. You don't need to reinvent the "forgot password" workflow (but you should still test it-more on that in Chapter 11). But for the strategic opportunities where you want to differentiate from your competitors, you'll want to take the time to generate several ideas to ensure that you uncover the best ones.

The Problem With Brainstorming